Learn How to Lower Heroku Dyno Latency through Persistent Connections (Keep-alive)

- Last Updated: November 04, 2025

The Performance Penalty of Repeated Connections

Before the latest improvements to the Heroku Router, every connection between the router and your application dyno risked incurring the latency penalty of a TCP slow start. To understand why this is a performance bottleneck for modern web applications, we must look at the fundamentals of the Transmission Control Protocol (TCP) and its history with HTTP.

Maybe you’ve heard of a keep-alive connection, but haven’t thought much about what it is or why they exist. In this post, we’re going to peel away some networking abstractions in order to explain what a keep-alive connection is and how it can help web applications deliver responses faster.

In short, a keep-alive connection allows your web server to amortize the overhead involved with making a new TCP connection for future requests on the same connection. Importantly, it bypasses TCP slow start phase so data in requests are transferred over maximum bandwidth. If you’re already familiar with connection persistence, you understand the magnitude of this change. For everyone else, let’s look at the fundamentals of TCP and the slow start performance bottleneck.

The Solution: Keep-Alive Connections and HTTP/1.0

When you type an address into a browser, it uses Transmission Control Protocol (TCP) to create a connection and a request to a destination website. An HTTP request has headers and a body; these are parts that a Python, Ruby, or NodeJS app will receive, process, and then send back a response.

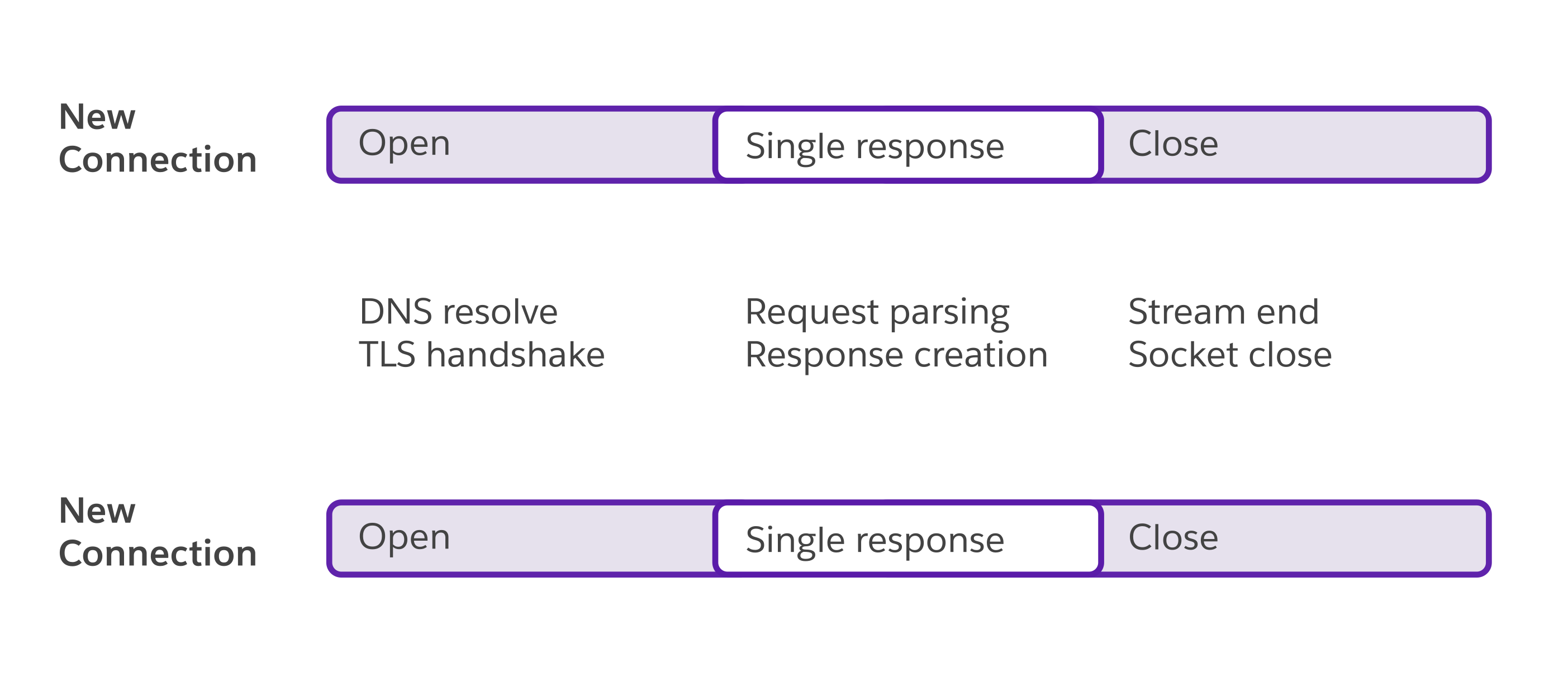

With HTTP/1.0, there was a one-to-one relationship between connections and requests. Every new request required opening a connection, sending the request, and then closing the connection.

This TCP connection is between sockets on computers. Once the connection is established, one server can send data to another in the form of a request or a response. This data queues up on the socket until a program (like a web server) calls accept on it to take the next connection in the queue. HTTP/1.0 was very simple, but it was inefficient due to something called TCP slow start.

TCP slow start

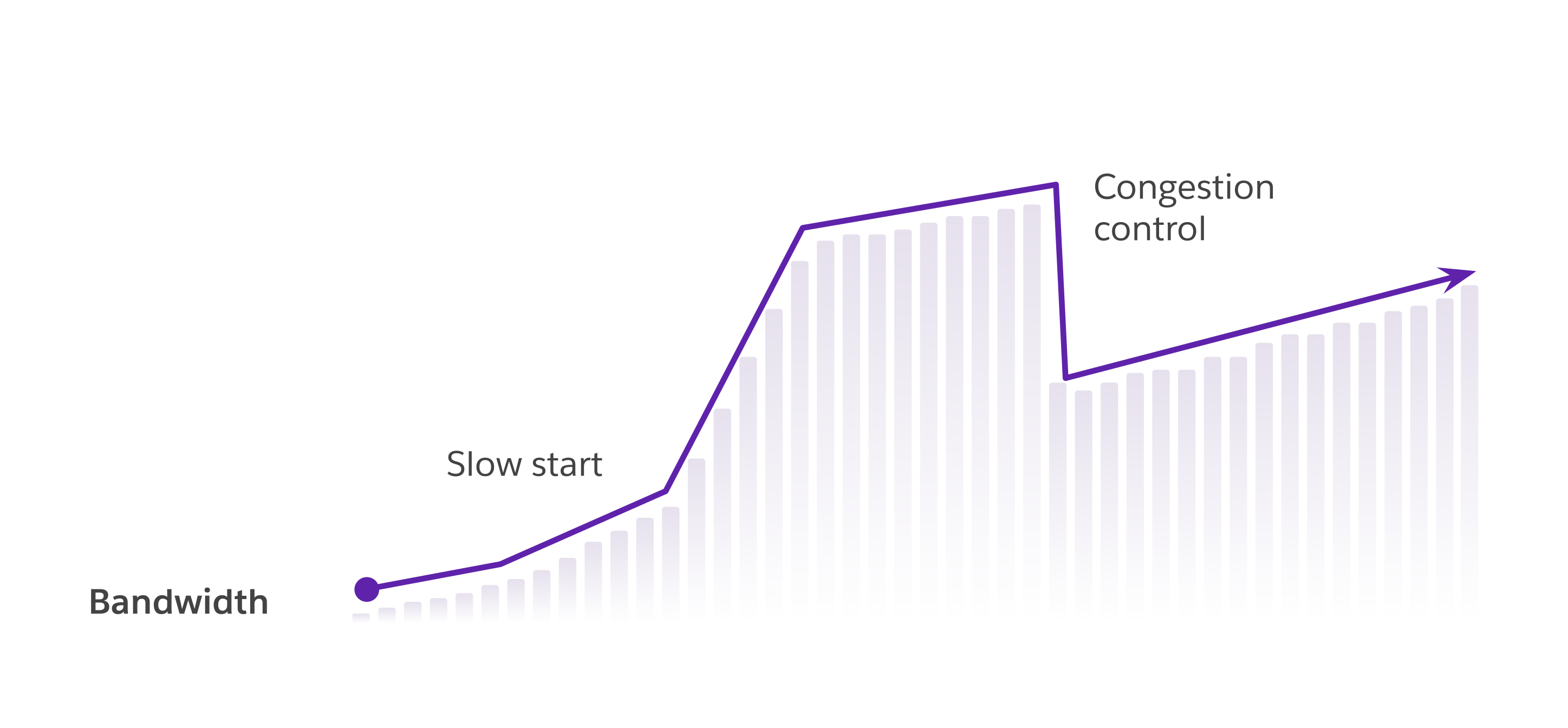

A TCP “connection” isn’t one thing; it’s a stream of packets. The speed (bandwidth) at which the connection operates is dependent on the network speed of the sender, the receiver, and everything in between. If the sender delivers packets too fast, they start getting dropped, which means the sender has to re-deliver those packets.

In a perfect world, the client (sender) would send packets at the maximum possible bandwidth, but not one byte over. In the real world, there’s no way to know that magical number. Even worse, the speed of the network isn’t static and might change. To solve the problem, an algorithm called TCP slow start is used, which dynamically tunes the bandwidth at the same time that data is being sent. You might remember I brought up this algorithm in my article on writing a GCRA rate throttling algorithm. In short, it sends data slowly, and every time that data is received successfully, it increments the speed a bit. If any data is lost, it takes drastic measures to slow down before gradually ramping up again.

TCP slow start is a good thing, but as the name implies, it is slow to start. And since TCP is stateless, every time you start talking to the same server, it has to repeat the process with zero assumptions. So if a browser is making repeated requests to the same endpoint, it has to repeat that TCP slow start multiple times. Not great.

TCP slow start has two parts contributing to this problem. The first is the “slow.” If we could find a faster algorithm, then it would eliminate the problem. We might tune and tweak it some more, but it’s an algorithm that’s already well researched. The other is the “start.” We might not be able to make a faster algorithm, but we only have to pay this cost once: at the start of a connection. If the connection doesn’t close, we can reuse it.

HTTP/1.1 Keepalives and Pipelines

With HTTP/1.1, we have two new tools for this TCP slow start problem: pipeline and keep-alive connections. Both operate on the same basis: decouple requests from connections to reduce the “start” side of the problem.

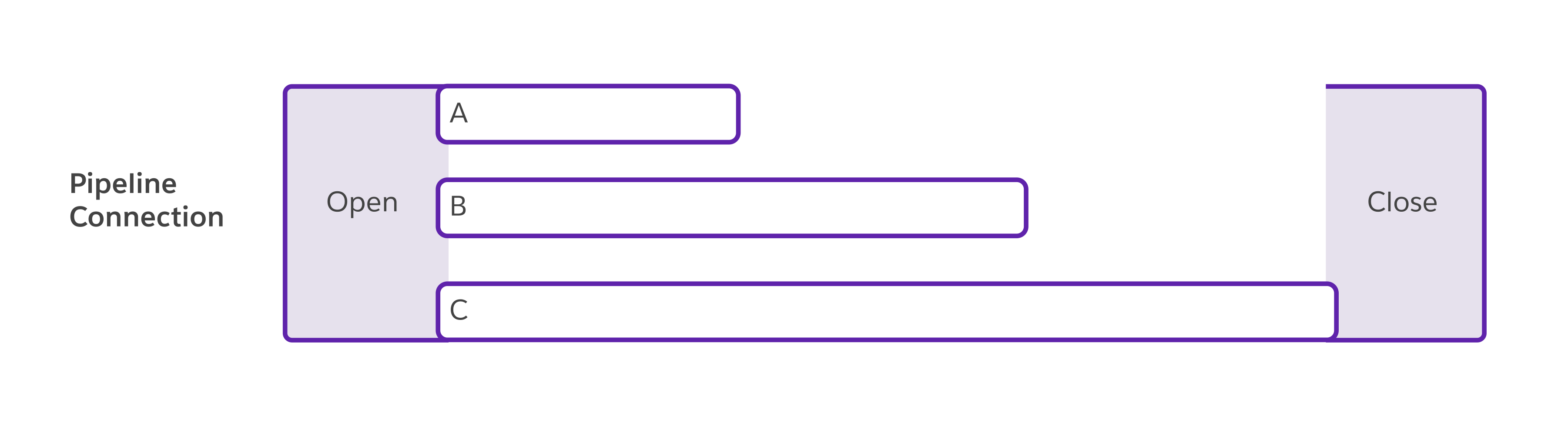

Pipeline connections allow sending multiple requests in one payload. The headers and bodies are constructed ahead of time, concatenated together, and delivered to the server up front. In this scenario, one connection carries multiple requests (A, B, and C), but they’re all delivered at once.

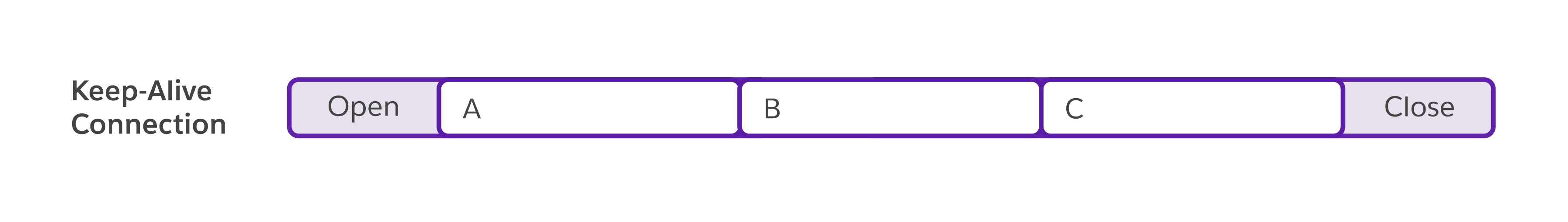

Keep-alive connections usually only carry one request up front (versus a pipeline request), but they tell the server, “once you’re done responding, don’t hang up, I might have another request for you.” This mechanism is very useful. It allows a browser to do things like: keep a pool of connections to the same origin so it can reuse them when downloading images or CSS. Unlike pipeline requests, it doesn’t have to know the use cases ahead of time, so this mechanism is more flexible.

The important thing here is that both pipeline and keep-alive connections work around the TCP slow start bottleneck by not closing the connection. These are features that come from the HTTP/1.1 spec and are fairly well supported by most web servers.

Heroku Router 2.0: Unlock Lower Latency Today

Repeatedly paying the cost of a new TCP connection for every request is a hidden performance tax, that potentially slows down your application. Heroku’s Router 2.0 is already set up to use keep-alive connections by default, eliminating the costly TCP slow start penalty on repeat requests. Now it’s time to ensure your application can fully take advantage of that unlocked bandwidth and latency reduction.

If you previously experienced unfair latency spikes and disabled keep-alives (in config/puma.rb or via a Heroku lab’s feature), we strongly recommend you upgrade to Puma 7.1.0+.

Follow these steps to upgrade today:

1. Install the latest Puma version

$ gem install puma

2. Upgrade your application

$ bundle update puma

3. Check the results and deploy

$ git add Gemfile.lock

$ git commit -m "Upgrade Puma version"

$ git push heroku

Note: Your application server won’t hold keep-alive connections if they’re turned off in Puma config, or at the Router level.

You can view the Puma keep-alive settings for your Ruby application by booting your server with PUMA_LOG_CONFIG. For example:

$ PUMA_LOG_CONFIG=1 bundle exec puma -C config/puma.rb

# ...

- enable_keep_alives: true

You can also see the keep-alive router settings on your Heroku app by running:

$ heroku labs -a <my-app> | grep keepalive

[ ] http-disable-keepalive-to-dyno Disable Keepalives to Dynos in Router 2.0.

We recommend all Ruby apps upgrade to Puma 7.1.0+ today!

The foundation is set for faster experiences. Beyond HTTP/1.1, the new Heroku Router also supports HTTP/2 features, enabling us to deliver new routing capabilities faster than ever before. Start delivering faster, more responsive applications today. Review your web server version and application configurations to enjoy the full power and lower latency of keep-alive connections.

- Originally Published:

- Performance Optimization