In today’s AI-driven world, data is fuel and accuracy is everything. Unfortunately, some organizations still struggle with unreliable, fragmented, and manual data migration processes that slow down the ability to scale and innovate. We sat down with the Salesforce Data Migration Team to talk about how Heroku was instrumental in their evolution, so let’s take a look at what we learned.

Facing challenges head-on

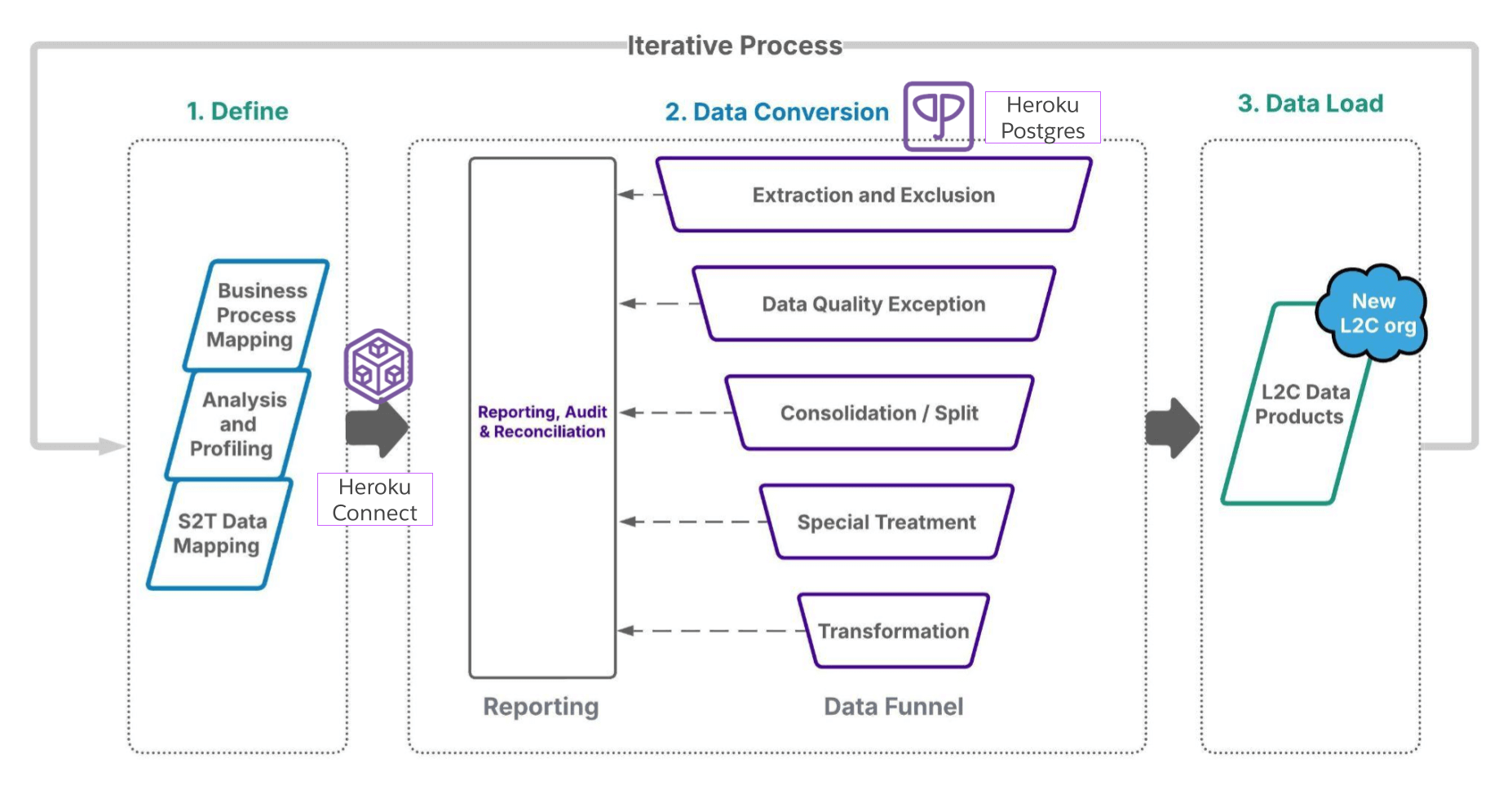

Repeatability is at the heart of operational efficiency: the goal is one solution that delivers consistently, again and again. To that end, the Data Migration Team dedicated themselves to Data Migration as a Service (DMaaS), a Salesforce-on-Salesforce platform for one-time data movement.

However, when the team started out, one of the biggest issues was the inability to reuse solutions: each was a bespoke, full-stack build created from scratch and discarded after use. Iterations consumed an enormous amount of time, and the reliability of the resulting data varied widely, requiring still more time to check and correct.

One of my main goals was to always be able to prove what we did and improve that customer success, not necessarily just for my customers, but also for my team. I didn’t want them to walk away demoralized every time we did a data migration. We would throw in so much time, effort, and energy just to get about 70% right and walk away with such poor customer satisfaction with all of our effort.

– Robert Boles, Salesforce Senior Solutions Architect

Needing solutions, fast

With acquisitions mounting up and stakeholders demanding fast, accurate data, the Data Migration Team continued to struggle with each new instance that required data migration. Since they were the team meant to enable other teams to deliver projects correctly, it was a growing concern. The solution would first and foremost need to be durable so it could be replicated, rather than needing to be rebuilt for each new request. Thankfully, a fix was readily available: Heroku.

Choosing something trusted

Adding Heroku to the process made total sense since it’s under the Salesforce umbrella. But even beyond that, Heroku is cloud-based, supports SQL, and is highly secure thanks to security and compliance features like Private Spaces, Shield Private Spaces, SSL, and Rollbacks. Once the decision was made, the team quickly adopted Heroku Connect for data synchronization, Heroku Postgres as the persistent data store with PL/pgSQL to define data conversion instructions, Airflow to orchestrate the overarching data migration symphony, and MuleSoft API support.
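To make the orchestration idea concrete, here is a minimal sketch of running migration steps in dependency order. It uses only the Python standard library rather than Airflow itself, and the step names are hypothetical placeholders, not the team's actual pipeline.

```python
from graphlib import TopologicalSorter

# Hypothetical migration steps, each mapped to the steps it depends on.
# These names are illustrative assumptions, not taken from the team's setup.
MIGRATION_STEPS = {
    "extract_from_source": set(),
    "stage_in_postgres": {"extract_from_source"},
    "convert_to_target_shape": {"stage_in_postgres"},
    "validate_converted_rows": {"convert_to_target_shape"},
    "sync_to_salesforce": {"validate_converted_rows"},
}


def execution_order(steps):
    """Return step names in an order that respects every dependency."""
    return list(TopologicalSorter(steps).static_order())


def run(steps, handlers):
    """Call each step's handler in dependency order; return the steps completed."""
    completed = []
    for name in execution_order(steps):
        handlers[name]()  # an exception here prevents later steps from running
        completed.append(name)
    return completed
```

An orchestrator such as Airflow adds scheduling, retries, and monitoring on top of this ordering, which is why the same step graph can be reused from one migration to the next.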

Our very first migration with the toolset, we had zero fallouts and we were within 99.8% financial accuracy. When we walked away, everybody on the program was ecstatic with the result. There was no extended hyper care. In fact, I was so skeptical, I made Vishwa prove it to me several times that we had no fallouts and that we were that accurate.

– Robert Boles, Salesforce Senior Solutions Architect

From months to minutes

Before Heroku, the team would spend upwards of three months’ worth of project hours setting up a single application processing system, which included creating the new solution and then verifying the data. After Heroku, everything changed for the better. The first obvious benefit was no longer having to create a new, bespoke solution each time; instead, Heroku provided a framework that could be reused and easily modified for new project needs. Next came the data output, which after a little tuning was near 100% accurate, each and every time.

The results were clear: from weeks of setup (and months of project hours) down to about five minutes of setting up Heroku Connect.

As Customer Zero, we have designed and matured a scalable, in-house data migration solution at Salesforce, built on Heroku. This solution has significantly improved the speed and accuracy of cross-org data integration. It is reusable, highly efficient, and has become a substantial time and resource saver for teams across the organization.

– Sanjeev Arora, VP – Salesforce on Salesforce, Revenue BPG

Extracting value from Heroku

For the team, Heroku became an intermediate layer where data persists before it is delivered as part of the migration. Looking at its capabilities, they found it could extract data from the source, transform the data into the structure of the target, ensure the security of the data, ensure availability of the database’s persistent layer, and orchestrate the entire flow of data across the database. And all of the Heroku tools involved came right out of the box, meaning minimal additional setup.
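The extract-and-transform flow described above can be sketched as a small, rule-driven staging step. This is an illustration under stated assumptions: the field names, the `FieldRule` type, and the conversion functions are hypothetical, and in the team's setup the conversion logic lives in PL/pgSQL on Heroku Postgres rather than in Python.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class FieldRule:
    """Describes how one target field is derived from a source record."""
    target: str
    source: str
    convert: Callable[[str], object]


# Hypothetical mapping for one migration; a new project would supply its own rules.
ACCOUNT_RULES = [
    FieldRule("Name", "company_name", lambda v: v.strip()),
    FieldRule("AnnualRevenue", "revenue_usd", lambda v: float(v)),
    FieldRule("BillingCountry", "country", lambda v: v.strip().upper()),
]


def transform(record, rules):
    """Reshape one source record into the target structure."""
    return {rule.target: rule.convert(record[rule.source]) for rule in rules}


def stage(records, rules):
    """Transform every record, keeping clean rows apart from fallouts."""
    staged, fallouts = [], []
    for record in records:
        try:
            staged.append(transform(record, rules))
        except (KeyError, ValueError) as exc:
            # Keep the failing record and the reason so it can be corrected and replayed.
            fallouts.append((record, repr(exc)))
    return staged, fallouts
```

Keeping the mapping as data rather than code is one way to get the reuse the team describes: the `stage` function stays the same across migrations while only the rule list changes.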

We saw that [Heroku Connect] was able to sync the data very efficiently. […] The time that it takes for us to set up a database right now in Heroku, it’s very easy. It’s click, not code. It’s very efficient that way. Setting up an extraction pipeline using Heroku Connect takes us like five minutes to set up compared to us building something from scratch for extraction or loading.

– Vishwajeet Joshi, Salesforce Lead Member of Technical Staff

Fundamental time shift

Another area of the team’s work was acquisitions. Previously, the time from acquisition to full integration in Salesforce could take up to a year, with much of that time spent by developers working out how to connect and maintain the data pipelines.

The value proposition of deriving value from our M&As has shifted dramatically with Heroku. Time-to-market or time-to-revenue is now measured not in quarters or years, but in weeks or months. Gone are the days of waiting for the tooling to be ready to integrate an acquisition. With Heroku’s aid, our tooling is ready to scale to amalgamate the next M&A.

– Akshay Ajit Dhuri, Salesforce Senior Product Manager

Taking more calculated risks

Leadership has taken note of this newfound speed and data accuracy, and feels even more confident in the team and in the company’s ability to make acquisitions. Not only can they accelerate integration, but they can also approach more complex companies. This opens the door for more calculated risks thanks to a more trusted approach to the data and a far more stable pipeline to full integration.

Because of what we have been able to bring to the table, like the Heroku-powered platform, the leadership can now operate with that clarity, speed, and most importantly trust, which is our #1 core value at Salesforce. The data will flow. Systems will align and Day One will feel like we’ve been running together all along. That is the story we have demonstrated with 90 days from deal signing to going live.

– Pooja Tripathi, Salesforce Director Product Line

What’s next for the Data Migration Team?

The Data Migration Team is enjoying its success, and expectations for the future are even higher. Setting reasonable expectations takes on new meaning when you’ve already hit nearly 100% data accuracy with such a rapid turnaround. That just means locking in best-of-the-best work, which the team is eager to keep doing.

We’re almost victims of our own success. When we started this off, a lot of it was about setting reasonable expectations around statistical, large arrays of data. So, we set up a standard of about 98% accuracy, that’s 3σ, which isn’t bad when you start thinking about all the elements compounding together. And those variabilities all drive each other, every fallout builds on the next fallout. But now that we’re up in the 6σ range, we’re almost victims of our own success because now even though it’s a stated standard of 3σ, they expect us to be hitting four, five, and six and thankfully we just keep cranking right there.

– Robert Boles, Salesforce Senior Solutions Architect

How they did it

- Used Heroku Connect for bi-directional data synchronization between Heroku Postgres and Salesforce

- Leveraged Heroku’s built-in security and compliance features like Private Spaces, Shield Private Spaces, SSL, and Rollbacks

- Deployed a Python-based backend to Heroku using GitHub integration and automatic deploys for streamlined releases

- Used Heroku Key-Value Store to power real-time alert logic

- Coordinated live incident reporting via Slack bots that triggered updates to Heroku-hosted APIs for instant public alerts

- Scaled new services like a geospatial tile server using Terraform, with autoscaling web dynos and container support for portability

- Leveraged pre-production environments, static IPs, and logging Add-ons to stage safely and monitor performance without DevOps overhead